ISO 20022 — An enabler for AI (and BI)

ISO 20022 — An enabler for AI (and BI)

ISO 20022 — An enabler for AI (and BI)

October 20, 2023

Part 1 — Setting the Stage

In the world of finance, data is the lifeblood. It fuels decisions, drives innovations, and secures the flow of money. Amid this data-driven landscape, ISO 20022 emerges as the universal language for financial messaging. It doesn’t just provide a standardized syntax, it delivers a rich semantic context that transforms the exchange of financial information into a structured, insightful journey.

On one hand, ISO 20022 empowers operational efficiency and risk mitigation. It enables faster transactions, reduces errors, and slashes costs, particularly in the domains of financial crime compliance and payment investigations. On the other hand, it paves the way for revenue-generating opportunities by offering a wealth of additional data points. These data points will allow us to delve deep into the intricacies of transactions, ultimately revealing a sharp view on the money flows and the actors involved.

The global adoption of ISO 20022 is on the rise, driven by international working groups, cross-border networks, market infrastructures, and financial institutions.

One of the remarkable attributes of ISO 20022 is its openness. It’s accessible to all, fostering innovation and interoperability across a spectrum of contexts, from domestic to cross-border networks, and across various rails, spanning high and low-value payments, inter and intra-banking transactions, within financial messaging but also blockchain networks (e.g. Ripple, XDC), potentially serving CBDCs, too. ISO 20022 also sets the stage for expansion which could go far beyond payments. It encompasses message types and processes that can potentially cover all aspects of finance, including securities clearing and events or trade finance, to name a few.

While much of the focus currently centers on the transactional services facilitated by this standard, little is said about the equally critical analytics services that harness the wealth of data collected.

This article explores ISO 20022 from a data analytics perspective.

Part 2 — The Data Analytics Opportunity

Embracing a New Data Reality

ISO 20022 revolutionizes financial transactions by allowing data to travel seamlessly with the money. This feature has the potential to revolutionize decision-making processes. By structuring data atomically and embedding a rich semantic context within each data element, ISO 20022 ensures precision and deep insights.

Analytics as a Multiplier

Data processing typically involves two concurrent types of services: transactional services, which deal with the immediate processing of data according to predefined rules, and analytics services, which add value by providing insights and in-depth analysis of the data. These two layers work in tandem to optimize data utilization and decision-making within a system.

Transactional services are the domain of business rules. They dictate actions based on specific conditions — if you get A and B, do C. These rules are created by experts, implemented by software engines, and the job is considered complete once a transaction reaches its destination, with reporting and audits taking place at a later time.

On the other hand, analytics is all about learning from data, a process that can be undertaken by humans through Business Intelligence (BI) or via Machine Learning (ML), a subset of Artificial Intelligence (AI). ISO 20022 ticks many boxes for data analytics as a strategic data source: it offers data that is rich, big, real-time, and standardized.

While messages can be viewed as discrete information units, their true value emerges when they are collected and aggregated. It is the data accumulation that reveals precious context, being for descriptive or predictive analytics.

When messages are grouped into business transactions (with or without a unique identifier), they unveil the entire path taken by a payment, the completeness of its information, its timing, or its back-and-forth error corrections, to name just a few aspects. Aggregating data at the party level sets the stage for calculating risk factors and evidence suspicious behaviors. Data collections at the business or company level provide the foundation for compliance and risk reporting, while the granularity of ISO 20022 data is ideal for efficient anonymization and targeted cross-bank information exchange.

Data accumulation is also the key to capturing representative patterns. It aids in detecting attributes that show correlation with specific targets of interest, such as predicting suspicious transactions or payment delays. It can also uncover outliers in the data flow, whether they are genuine anomalies or valid exceptional behaviors requiring attention. In a BI workflow, data would be used to build reports, conduct investigations, and generate insights. In an AI workflow, the data would be used to automatically identify anomalies, streamline decision-making processes (e.g. sanctions), extract trends (e.g. purchase habits), make forecasts (e.g. account movements), or perform clustering (e.g. grouping similar accounts). AI enables us to uncover complex or even unknown patterns that might be impossible to discern with the human eye and for which no scenario has been established yet. It does so by systematically learning to minimize errors based on large volumes of data.

Part 3 — The Challenge of Evolving Semi-Structured Data

The Complexity of Semi-Structured Data

Humans and machine learning algorithms excel at analyzing two types of data with the current tooling: unstructured (such as text, images, and sound) and structured (such as tables). However, ISO 20022 introduces a unique challenge as it primarily consists of semi-structured data.

Precisely, ISO 20022 data can be visualized as a vast, complex tree-like object with tenths of depth levels and hundreds of thousands of contextualized attributes (!). In fact, there are almost as many attributes as there are words in the English dictionary. While this level of detail allows for precise descriptions of data items, it also poses a real challenge. Neither the human eye nor typical machine learning tools are accustomed to processing such a complex structure as a whole.

One naive approach is to cherry-pick attributes that seem relevant while discarding the rest. Another approach involves building endless queries or endpoints to capture useful content iteratively. However, both of these methods are far from efficient.

The Persistence of Unstructured Content

Despite the structured nature of ISO 20022, some data elements may still tolerate unstructured content. Notably, fields like postal addresses <AdrLine> or remittance information <Ustrd> can still include plain human text in certain dedicated areas. These data items will require text-processing engines to encode this information for machines. Furthermore, even if these text elements are presented in a structured form, such as postal addresses with sub-fields, their textual nature can introduce ambiguities into the processing. These may include typos, language or regional variations, and other challenges that arise when working with unstructured text.

The Multi-Dialect Challenge

Another challenge of ISO 20022 is that it’s far from being a homogenous language. Depending on the market, payment type, or the institutions involved, inconsistencies may appear in terms of the message types used, the set of attributes populated, and the content of those attributes themselves. While ongoing efforts [4] aim to standardize practices within specific networks, the inherent flexibility and wide range of use cases offered by the standard ensure that these dialects will persist in the long term. Moreover ISO 20022 is a living language, evolving with time. New attributes will be proposed and added to the standard over time as new use cases emerge. The dictionary of possibilities will continue to expand.

Visualization

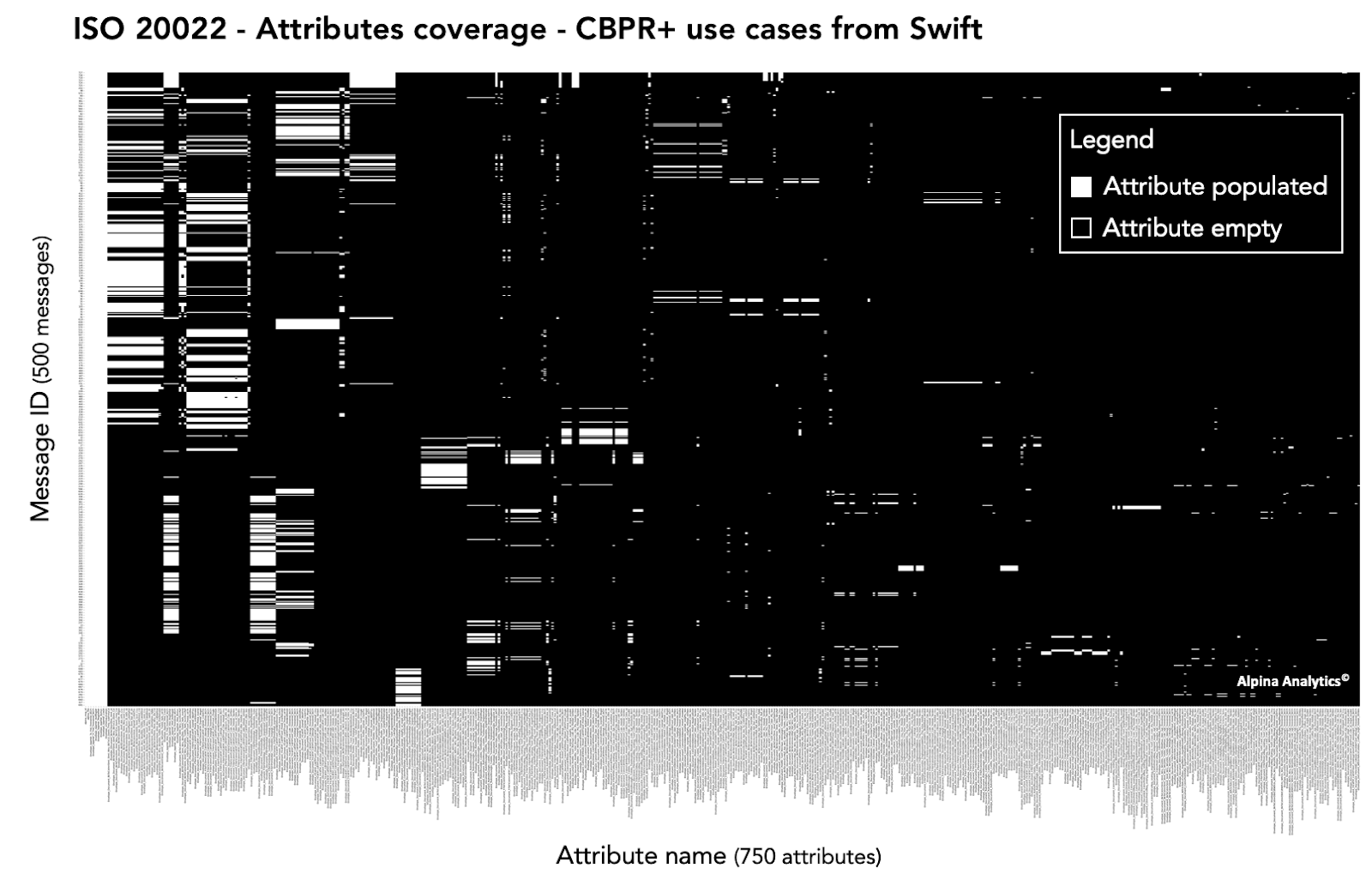

As a result, the scope and depth of ISO 20022 attributes can be staggering. To illustrate this, let’s explore the attributes coverage within the CBPR+ use cases message dataset [1]. This dataset consists of 500 ISO 20022 messages of various types of pain, pacs, and camt, see full list in [2]. Looking into those messages reveals a list of 750 attributes utilized.

The visualization below paints a vivid picture of the rich tapestry of ISO 20022. While a concise set of 7 attributes (all originating from <AppHdr>) finds a place in every message, the majority of attributes exhibit sparse coverage. In other words, they appear in only a select few messages. This demonstrates the extensive range of semantic descriptions employed to convey data items.

While this diversity allows for precise contextualization tailored to specific use cases, it poses a significant challenge for data analytics. Most analysts are accustomed to working with tables containing fewer than 100 attributes. The wealth of attributes in ISO 20022 can be a double-edged sword, offering depth but also requiring advanced tools and techniques to navigate this intricacy.

Part 4 — The Solution

Hence, a meticulous ISO 20022 data management strategy is essential, encompassing specialized data processing, storage, and access methods that extend beyond traditional message processing. In particular, it needs to deal with semi-structured data, evolving schemas, rich semantics, and complex processing, while allowing for big data batch and real-time processing scenarios, all this by taking into account the sensitive nature of the data (personal identifying information).

4.1 Processing

Effective data analytics, especially machine learning, requires access to the complete data content, with no data loss. This ensures that valuable patterns are not missed and that we can avoid costly joins across business divisions that may have already cleaned the raw data. Holistic parsers and extractors can achieve this.

Unstructured text components, like address fields, can be parsed into structured content using advanced address parsers trained on global data that make use of natural language processing (NLP) and machine learning (ML) techniques. The use of large language models (LLMs), can also be used for this purpose [3]. Fully unstructured data can be processed using traditional NLP techniques, including Entity Recognition (ER) algorithms. Address enrichment, such as geolocation data, can be the ultimate extension to allow machine processing using numerical codes (geo-coordinates).

4.2 Storage

Different data models will serve different use cases. It’s essential to enable tabular consumption via SQL on ISO 20022 (without loss of data !) for data analysts and business intelligence tools, while allowing data scientists to easily consume the same tabular data for their machine learning solutions. In combination, one might support those views with more flexible schema definitions (document, key-value and graph) in NoSQL databases.

4.3 Access

You’ll want to provide easy access to this organized data, allowing retrieval at different levels of granularity. Each data item in each message should be easily retrievable for lookups via SQL. Semantic search capabilities, including chatbots [5], can query your well-organized data, leveraging the rich semantics of the ISO 20022 standard. Recent advances in LLMs can also assist in these tasks. On the machine learning side, numerous open-source libraries offer connectors to programmatically source and filter your data of interest.

4.4 Platform

The level of complexity in structure and processing may vary depending on the use case. However, one constant factor is the difference in environment between the training and inference phases of the AI solution lifecycle. Typically, the explorative nature of the training phase contrasts with the constrained context of inference. Cloud platforms offer the preferred environment, allowing for elastic workloads and pay-per-use pricing. This approach enables you to fully exploit the potential of ISO 20022 without compromising information due to platform constraints or processing time. Additionally, you can leverage the latest cloud technologies on the entire data landscape, for data processing, data storage, databases, analytics, and even managed LLMs and generative AI.

In cases where regulation, policies, or architecture require you to process sensitive data on-premises, consider performing anonymization on-premises while offloading the heavy workload to the cloud.

Summary

In conclusion, ISO 20022 is laying the foundation for a wide range of promising analytics use cases. While these opportunities don’t come without their challenges, the current technological landscape, with the synergy of cloud computing, real-time processing, LLMs, SQL and NoSQL databases, and state-of-the-art AI algorithms, sets the stage for a powerful solution. Additionally, the right mix of open-source applications on production-level, highly secure, and scalable platforms can ensure a balanced approach that meets the demands of risk-averse delivery while keeping pace with up-to-date competition.

Next step

All the solutions mentioned in this article are embedded in our products Swiftflow and Swiftagent. Reach out directly to pierre.oberholzer@alpina-analytics.com to discuss your use case or visit Alpina Analytics for more information.

About Us

Pierre Oberholzer is the founder of Alpina Analytics, a Switzerland-based data team dedicated to building the tools necessary to make inter-banking data, including Swift MT and ISO 20022, ready for advanced analytics. We’ve confronted these challenges for years in real-life, high-risk application contexts, and we understand that banks need long-lasting proven expertise to mitigate risks, rather than quick wins that never make it to production.

References

[1] https://www2.swift.com/mystandards/#/c/cbpr/samples

[2] pain.001,002,008,pacs.002–004,008,009,010 and camt.029,052–060,107–110

[3] https://lnkd.in/eR23mFQQ

[4] https://www.bis.org/cpmi/publ/d218.htm

[5] https://swiftagent.alpina-analytics.com/